Snowflake-Arctic-Embed-m-v1.5 Released: A Groundbreaking 109M-Parameter Text Embedding Model with Enhanced Compression and Performance Capabilities

Snowflake recently announced the release of its updated text embedding model, snowflake-arctic-embed-m-v1.5. This model generates highly compressible embedding vectors while maintaining high performance. The model’s most noteworthy feature is its ability to produce embedding vectors compressed to as small as 128 bytes per vector without significantly losing quality. This is achieved through Matryoshka Representation Learning (MRL) and uniform scalar quantization. These techniques enable the model to retain most of its retrieval quality even at this high compression level, a critical advantage for applications requiring efficient storage and fast retrieval.
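To make the 128-byte figure concrete, here is a minimal sketch of how truncation and uniform scalar quantization can combine, assuming the vector is cut to 256 dimensions and each value is stored in 4 bits (256 × 4 bits = 128 bytes). The helper names, the random stand-in vector, and the per-vector clipping range are illustrative assumptions, not Snowflake's actual pipeline.

```python
import math
import random


def truncate_and_normalize(vec, dims=256):
    """Keep the first `dims` values (MRL-style truncation) and rescale to unit length."""
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head)) or 1.0
    return [x / norm for x in head]


def quantize_uniform(vec, lo, hi, bits=4):
    """Map each value in [lo, hi] to one of 2**bits evenly spaced integer codes."""
    levels = (1 << bits) - 1
    step = (hi - lo) / levels
    return [min(levels, max(0, round((x - lo) / step))) for x in vec]


def dequantize_uniform(codes, lo, hi, bits=4):
    """Approximate the original values from their integer codes."""
    levels = (1 << bits) - 1
    step = (hi - lo) / levels
    return [lo + c * step for c in codes]


def pack_4bit(codes):
    """Pack pairs of 4-bit codes into single bytes."""
    assert len(codes) % 2 == 0
    return bytes((codes[i] << 4) | codes[i + 1] for i in range(0, len(codes), 2))


# Hypothetical stand-in for a model output: a random 768-value vector.
rng = random.Random(0)
full = [rng.gauss(0.0, 1.0) for _ in range(768)]

small = truncate_and_normalize(full, dims=256)
# Assumption: the clipping range comes from this single vector. A real system
# would more likely fix one range for the whole corpus.
lo, hi = min(small), max(small)
codes = quantize_uniform(small, lo, hi, bits=4)
packed = pack_4bit(codes)
restored = dequantize_uniform(codes, lo, hi, bits=4)

print(len(packed))  # 128 bytes per vector
```

Each restored value differs from the original by at most half a quantization step, which is why retrieval quality can survive the compression when the model has been trained to concentrate information in the leading dimensions.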

The snowflake-arctic-embed-m-v1.5 model builds upon its predecessors by incorporating improvements in the architecture and training process. The snowflake-arctic-embed family of models was originally released on April 16, 2024. The updated version, v1.5, is designed to improve embedding vector compressibility while achieving slightly higher overall performance, which makes it particularly suitable for resource-constrained environments where storage and computational efficiency are paramount.

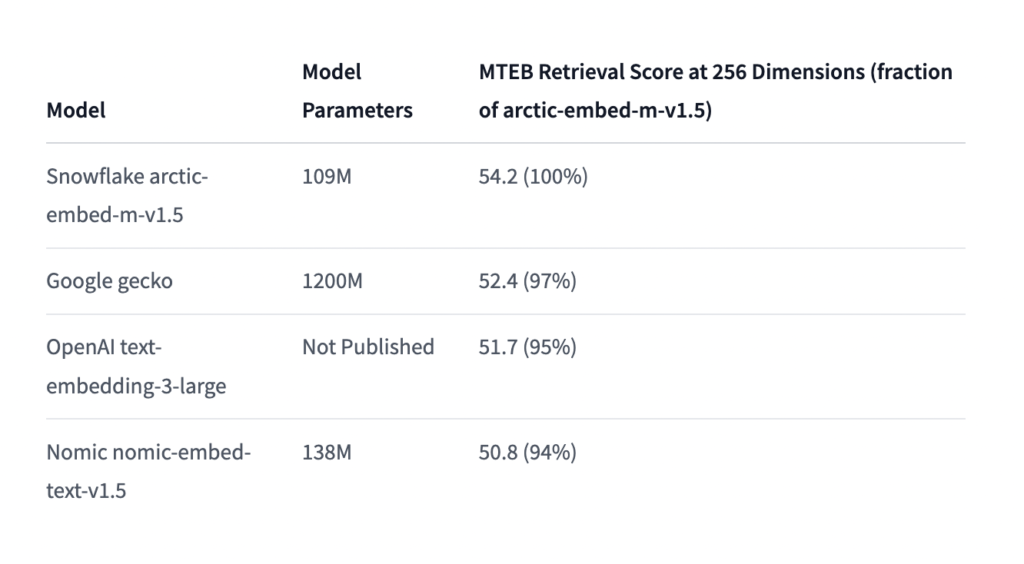

Evaluation results show that snowflake-arctic-embed-m-v1.5 performs strongly on retrieval benchmarks. For instance, the model achieves a mean retrieval score of 55.14 on the MTEB (Massive Text Embedding Benchmark) Retrieval benchmark when using 256-dimensional vectors, surpassing several other models trained with similar objectives. Compressed to 128 bytes per vector, it still retains a retrieval score of 53.7, demonstrating its robustness even under significant compression.
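As a quick check on what "retains" means here, the short calculation below works out the share of the score that survives compression, using only the two figures quoted above. The variable names are illustrative.

```python
# Scores as reported in the article (MTEB Retrieval).
score_uncompressed = 55.14
score_128_bytes = 53.7

retention = score_128_bytes / score_uncompressed
points_lost = score_uncompressed - score_128_bytes

print(f"retained {retention:.1%} of the score, lost {points_lost:.2f} points")
# prints: retained 97.4% of the score, lost 1.44 points
```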

The model’s technical specifications reveal a design that emphasizes efficiency and compatibility. It has 109 million parameters, and its embedding vectors can be truncated to 256 dimensions and further quantized for specific use cases. This adaptability makes it an attractive option for applications ranging from search engines to recommendation systems, where efficient text processing is crucial.
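The storage impact of truncation and quantization can be estimated with simple arithmetic. The sketch below compares a 256-dimensional vector stored as 32-bit floats with the same vector stored at 4 bits per value. The float32 baseline, the 4-bit width, and the 10-million-vector corpus are assumptions chosen for illustration.

```python
def bytes_per_vector(dims: int, bits_per_value: int) -> int:
    """Storage needed for one vector, rounded up to whole bytes."""
    return (dims * bits_per_value + 7) // 8


def corpus_gigabytes(num_vectors: int, dims: int, bits_per_value: int) -> float:
    """Raw vector storage for a corpus, in decimal gigabytes (no index overhead)."""
    return num_vectors * bytes_per_vector(dims, bits_per_value) / 1e9


float32_size = bytes_per_vector(256, 32)  # 1024 bytes
int4_size = bytes_per_vector(256, 4)      # 128 bytes
ratio = float32_size / int4_size          # 8.0

# Hypothetical corpus of 10 million documents.
num_docs = 10_000_000
print(f"float32: {corpus_gigabytes(num_docs, 256, 32):.2f} GB")
print(f"4-bit:   {corpus_gigabytes(num_docs, 256, 4):.2f} GB")
print(f"ratio:   {ratio:.0f}x")
```

Under these assumptions, the quantized corpus needs 1.28 GB instead of 10.24 GB, small enough to hold in memory on modest hardware.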

Snowflake Inc. has also provided comprehensive usage instructions for the snowflake-arctic-embed-m-v1.5 model. Users can implement the model using popular frameworks like Hugging Face’s Transformers and Sentence Transformers libraries. Example code snippets illustrate how to load the model, generate embeddings, and compute similarity scores between text queries and documents. These instructions facilitate easy integration into existing NLP pipelines, allowing users to leverage the model’s capabilities with minimal overhead.
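A minimal sketch of that workflow with Sentence Transformers might look like the following. The model identifier `Snowflake/snowflake-arctic-embed-m-v1.5` and the `"query"` prompt name are assumptions not stated in this article, so check the model card before relying on them. The scoring helpers are plain Python and are hypothetical names introduced for illustration.

```python
def dot_scores(query_vecs, doc_vecs):
    """Score every query against every document with a dot product."""
    return [
        [sum(q * d for q, d in zip(qv, dv)) for dv in doc_vecs]
        for qv in query_vecs
    ]


def rank(scores_row):
    """Return document indices ordered from best to worst match."""
    return sorted(range(len(scores_row)), key=lambda i: scores_row[i], reverse=True)


def embed_and_score(queries, documents,
                    model_id="Snowflake/snowflake-arctic-embed-m-v1.5"):
    """Load the model, embed both sides, and return a query-by-document score matrix."""
    # Imported lazily so the helpers above work without the library installed.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_id)
    # Assumption: the model ships a "query" prompt; documents use no prompt.
    query_emb = model.encode(queries, prompt_name="query", normalize_embeddings=True)
    doc_emb = model.encode(documents, normalize_embeddings=True)
    return dot_scores(query_emb.tolist(), doc_emb.tolist())


# Example usage (downloads the model on first run):
# scores = embed_and_score(
#     ["what is snowflake?"],
#     ["Snowflake is a data cloud company.", "Tacos are a Mexican dish."],
# )
# print(rank(scores[0]))
```

Because the embeddings are normalized to unit length, the dot product here equals cosine similarity.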

In terms of deployment, snowflake-arctic-embed-m-v1.5 can be used in various environments, including serverless inference APIs and dedicated inference endpoints. This flexibility ensures that the model can be scaled according to the specific needs and infrastructure of the user, whether they are running a small-scale application or a large enterprise-level one.

In conclusion, as Snowflake Inc. continues to refine and expand its offerings in text embeddings, the snowflake-arctic-embed-m-v1.5 model stands out as a testament to its expertise and vision. By addressing the critical needs for compression and retrieval performance, the model underscores the company’s commitment to advancing state-of-the-art text embedding technology and providing powerful tools for efficient and effective text processing. The model’s innovative design and high performance make it a valuable asset for developers and researchers seeking to enhance their applications with cutting-edge NLP capabilities.

Check out the Paper and HF Model Card. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.