RAGLAB: A Comprehensive AI Framework for Transparent and Modular Evaluation of Retrieval-Augmented Generation Algorithms in NLP Research

Retrieval-Augmented Generation (RAG) has faced significant challenges in development, including a lack of comprehensive comparisons between algorithms and transparency issues in existing tools. Popular frameworks like LlamaIndex and LangChain have been criticized for excessive encapsulation, while lighter alternatives such as FastRAG and RALLE offer more transparency but do not reproduce published algorithms. AutoRAG, LocalRAG, and FlashRAG each address some aspects of RAG development, but none provides a complete solution.

The emergence of novel RAG algorithms like ITER-RETGEN, RRR, and Self-RAG has further complicated the field, as these algorithms often lack alignment in fundamental components and evaluation methodologies. This lack of a unified framework has hindered researchers’ ability to accurately assess improvements and select appropriate algorithms for different contexts. Consequently, there is a pressing need for a comprehensive solution that addresses these challenges and facilitates the advancement of RAG technology.

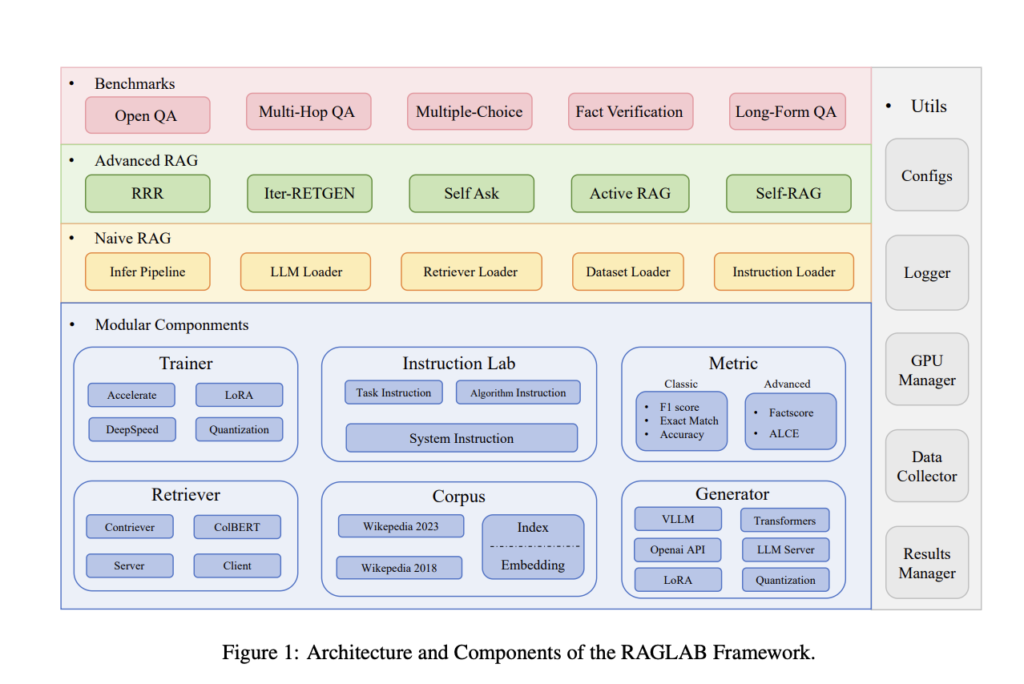

The researchers addressed these issues by introducing RAGLAB, a comprehensive framework for fair algorithm comparisons and transparent development. This modular, open-source library reproduces six existing RAG algorithms and enables efficient performance evaluation across ten benchmarks. The framework simplifies new algorithm development and promotes advancements in the field by addressing the lack of a unified system and the challenges posed by inaccessible or complex published works.

The modular architecture of RAGLAB facilitates fair algorithm comparisons and includes an interactive mode with a user-friendly interface, making it suitable for educational purposes. By standardizing key experimental variables such as generator fine-tuning, retrieval configurations, and knowledge bases, RAGLAB keeps comparisons between RAG algorithms comprehensive and equitable. This approach aims to overcome the limitations of existing tools and foster more effective research and development in the RAG domain.

RAGLAB employs a modular framework design, enabling easy assembly of RAG systems using core components. This approach facilitates component reuse and streamlines development. The methodology simplifies new algorithm implementation by allowing researchers to override the infer() method while utilizing provided components. Configuration of RAG methods follows optimal values from original papers, ensuring fair comparisons across algorithms.
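To make the "override infer()" idea concrete, here is a minimal sketch of the pattern in plain Python. It is not RAGLAB's actual API: the class names (`KeywordRetriever`, `ToyGenerator`, `NaiveRag`, `IterativeRag`), the `search`/`generate` methods, and the prompt format are all hypothetical stand-ins chosen for illustration. Only the `infer()` method name comes from the description above.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens, punctuation dropped."""
    return re.findall(r"\w+", text.lower())


class KeywordRetriever:
    """Hypothetical toy retriever: ranks passages by word overlap with the query."""

    def __init__(self, passages: List[str]):
        self.passages = passages

    def search(self, query: str, top_k: int = 2) -> List[str]:
        query_words = set(tokenize(query))
        ranked = sorted(
            self.passages,
            key=lambda p: len(query_words & set(tokenize(p))),
            reverse=True,
        )
        return ranked[:top_k]


class ToyGenerator:
    """Hypothetical stand-in for an LLM: echoes the first context passage."""

    def __init__(self):
        self.calls = 0

    def generate(self, prompt: str) -> str:
        self.calls += 1
        for line in prompt.splitlines():
            if line.startswith("- "):
                return line[2:]
        return ""


class NaiveRag:
    """Baseline pipeline: retrieve once, then generate."""

    def __init__(self, retriever, generator, top_k: int = 2):
        self.retriever = retriever
        self.generator = generator
        self.top_k = top_k

    def build_prompt(self, query: str, passages: List[str]) -> str:
        context = "\n".join(f"- {p}" for p in passages)
        return f"Context:\n{context}\nQuestion: {query}"

    def infer(self, query: str) -> str:
        passages = self.retriever.search(query, self.top_k)
        return self.generator.generate(self.build_prompt(query, passages))


class IterativeRag(NaiveRag):
    """A new algorithm reuses every component and overrides only infer()."""

    def __init__(self, retriever, generator, top_k: int = 2, rounds: int = 2):
        super().__init__(retriever, generator, top_k)
        self.rounds = rounds

    def infer(self, query: str) -> str:
        answer = ""
        for _ in range(self.rounds):
            # Feed the previous draft answer back into retrieval.
            search_query = f"{query} {answer}".strip()
            passages = self.retriever.search(search_query, self.top_k)
            answer = self.generator.generate(self.build_prompt(query, passages))
        return answer


passages = [
    "Paris is the capital of France.",
    "Berlin is the capital of Germany.",
    "The Seine flows through Paris.",
]
question = "What is the capital of France?"
print(NaiveRag(KeywordRetriever(passages), ToyGenerator()).infer(question))
print(IterativeRag(KeywordRetriever(passages), ToyGenerator()).infer(question))
```

Because both classes share the same retriever and generator objects, any difference in output comes from the algorithm itself, which is the property a fair comparison needs.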

The framework conducts systematic evaluations across multiple benchmarks, assessing six widely used RAG algorithms. It provides five evaluation metrics: three classic and two advanced. RAGLAB’s user-friendly interface minimizes coding effort, allowing researchers to focus on algorithm development. This methodology emphasizes modular design, straightforward implementation, fair comparisons, and usability to advance RAG research.

Experimental results revealed varying performance among RAG algorithms. The selfrag-llama3-70B model significantly outperformed other algorithms across 10 benchmarks, while the 8B version showed no substantial improvements. Naive RAG, RRR, ITER-RETGEN, and Active RAG demonstrated comparable effectiveness, with ITER-RETGEN excelling in multi-hop QA tasks. RAG systems generally underperformed LLMs used without retrieval on multiple-choice questions. The study employed diverse evaluation metrics, including FactScore, ALCE, accuracy, and F1 score, to support robust algorithm comparisons. These findings highlight the impact of model size on RAG performance and provide valuable insights for natural language processing research.
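For readers unfamiliar with the classic metrics mentioned above, the following sketch shows one common way to compute a token-level F1 score and a simple accuracy for question answering. It illustrates the general technique and is not RAGLAB's implementation: the function names, the tokenization rule, and the choice to count a prediction as correct only when it matches the reference after normalization are assumptions made for this example.

```python
import re
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase and split into word tokens, dropping punctuation."""
    return re.findall(r"\w+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two answers."""
    pred_tokens = normalize(prediction)
    ref_tokens = normalize(reference)
    if not pred_tokens or not ref_tokens:
        # Two empty answers count as a match; otherwise no overlap is possible.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it
    # appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def accuracy(predictions: List[str], references: List[str]) -> float:
    """Fraction of predictions equal to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not predictions:
        return 0.0
    hits = sum(
        normalize(p) == normalize(r) for p, r in zip(predictions, references)
    )
    return hits / len(predictions)


print(token_f1("The capital is Paris", "Paris"))
print(accuracy(["paris", "Berlin"], ["Paris", "Rome"]))
```

F1 gives partial credit to a verbose but correct answer, while this strict form of accuracy does not, which is one reason evaluations usually report several metrics side by side.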

In conclusion, RAGLAB emerges as a significant contribution to the field of RAG, offering a comprehensive and user-friendly framework for algorithm evaluation and development. This modular library facilitates fair comparisons among diverse RAG algorithms across multiple benchmarks, addressing a critical need in the research community. By providing a standardized approach for assessment and a platform for innovation, RAGLAB is poised to become an essential tool for natural language processing researchers. Its introduction marks a substantial step forward in advancing RAG methodologies and fostering more efficient and transparent research in this rapidly evolving domain.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Shoaib Nazir is a consulting intern at MarktechPost and has completed his M.Tech dual degree from the Indian Institute of Technology (IIT), Kharagpur. With a strong passion for Data Science, he is particularly interested in the diverse applications of artificial intelligence across various domains. Shoaib is driven by a desire to explore the latest technological advancements and their practical implications in everyday life. His enthusiasm for innovation and real-world problem-solving fuels his continuous learning and contribution to the field of AI.