PyramidInfer: Allowing Efficient KV Cache Compression for Scalable LLM Inference

LLMs like GPT-4 excel at language comprehension but consume large amounts of GPU memory during inference, which limits their scalability for real-time applications such as chatbots. Existing methods reduce memory by compressing the KV cache, but they overlook dependencies between layers and the memory consumed while pre-computing the prompt (the prefill phase). Inference memory comes primarily from the model parameters and the KV cache, and the latter can be far larger: for instance, a 7-billion-parameter model needs 14 GB for its parameters but, at large batch sizes and long contexts, 72 GB for its KV cache. This memory requirement restricts the throughput of LLM inference on GPUs.
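To see why the KV cache can outgrow the weights, the short Python calculation below sizes a full cache. It is a back-of-the-envelope sketch that assumes a LLaMA 2-7B-style configuration (32 layers, hidden size 4096, standard multi-head attention) stored in fp16. The batch size and sequence length are illustrative choices, since the article does not state the setting behind its 72 GB figure, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers: int, hidden_size: int, seq_len: int,
                   batch_size: int, bytes_per_elem: int = 2) -> int:
    """Size in bytes of a full (uncompressed) KV cache.

    Every layer stores one key vector and one value vector (hence the
    factor of 2) of width hidden_size for each token of each sequence.
    """
    return 2 * num_layers * hidden_size * seq_len * batch_size * bytes_per_elem


# Assumed config: LLaMA 2-7B style, 32 layers, hidden size 4096, fp16 (2 bytes).
per_token = kv_cache_bytes(32, 4096, seq_len=1, batch_size=1)
print(per_token)  # 524288 bytes = 0.5 MiB per token

# Illustrative serving load: 32 sequences of 4096 tokens each.
full = kv_cache_bytes(32, 4096, seq_len=4096, batch_size=32)
print(f"KV cache: {full / 1e9:.1f} GB")  # KV cache: 68.7 GB

# Parameters for comparison: ~7e9 weights at 2 bytes each.
print(f"Weights: {7e9 * 2 / 1e9:.0f} GB")  # Weights: 14 GB
```

The cache grows linearly with both batch size and sequence length while the weights stay fixed, which is why the KV cache, not the parameters, becomes the bottleneck for high-throughput serving.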

Researchers from Shanghai Jiao Tong University, Xiaohongshu Inc., and South China University of Technology developed PyramidInfer, which speeds up LLM inference by compressing the KV cache. Unlike existing methods, which overlook inter-layer dependencies and the memory demands of the prefill phase, PyramidInfer retains only the crucial context keys and values in each layer, keeping fewer of them in deeper layers. Motivated by the observation that recent tokens consistently attend to the same context tokens, this approach significantly reduces GPU memory usage. Experiments show that PyramidInfer improves throughput by 2.2x compared with HuggingFace Accelerate while reducing KV cache memory by over 54%, and that it remains effective across various tasks and models.
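The name reflects a KV cache that shrinks with depth. The Python sketch below shows one hypothetical way to assign per-layer budgets: layer 0 keeps every token and the kept fraction falls linearly to `min_ratio` at the last layer. The function name `pyramid_budgets`, the linear schedule, and the ratio values are assumptions for illustration, not PyramidInfer's actual configuration.

```python
def pyramid_budgets(num_layers: int, seq_len: int, min_ratio: float = 0.3) -> list[int]:
    """Hypothetical per-layer KV budgets that shrink with depth.

    Layer 0 keeps every token; the retained fraction then decreases
    linearly to `min_ratio` at the last layer, so the cache sizes form
    a pyramid. The linear shape is an illustrative assumption.
    """
    if num_layers == 1:
        return [seq_len]
    budgets = []
    for layer in range(num_layers):
        ratio = 1.0 - (1.0 - min_ratio) * layer / (num_layers - 1)
        budgets.append(max(1, round(ratio * seq_len)))  # keep at least one token
    return budgets


budgets = pyramid_budgets(num_layers=4, seq_len=1000, min_ratio=0.4)
print(budgets)  # [1000, 800, 600, 400]
print(sum(budgets) / (4 * 1000))  # 0.7 of a full cache
```

In this toy setting the four layers together store 70% of a full cache; a steeper schedule would save more at the risk of dropping context that deeper layers still need.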

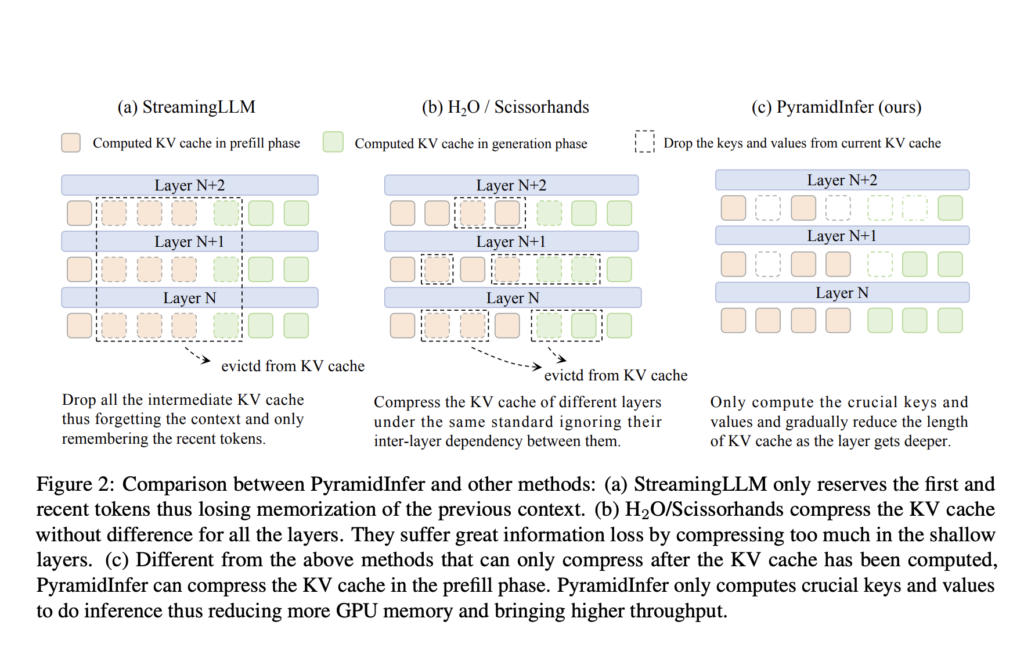

Serving the growing volume of chatbot queries efficiently requires maximizing throughput by exploiting GPU parallelism. One approach is to enlarge the available memory, either by spreading the model across multiple GPUs with pipeline parallelism or by offloading the KV cache to CPU RAM. When GPU memory is limited, another option is to shrink the KV cache itself. Techniques like FlashAttention 2 and PagedAttention minimize memory waste through optimized CUDA kernels, while methods such as StreamingLLM, H2O, and Scissorhands compress the KV cache by keeping recent context or tokens selected by attention scores. However, these methods overlook differences between layers and do not compress the cache during the prefill phase. PyramidInfer addresses these gaps by applying layer-specific compression in both the prefill and generation phases.
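For contrast, a recent-context strategy can be sketched in a few lines. The Python below mimics the StreamingLLM idea of keeping a handful of initial "attention sink" tokens plus a sliding window of recent tokens. The parameter values are illustrative, and `streaming_keep_indices` is a hypothetical helper, not StreamingLLM's API. Note that it applies the same fixed rule in every layer, which is the layer-agnostic behavior PyramidInfer departs from.

```python
def streaming_keep_indices(seq_len: int, num_sink: int = 4, window: int = 8) -> list[int]:
    """Token positions kept by a StreamingLLM-style KV cache.

    The first `num_sink` tokens (attention sinks) and the most recent
    `window` tokens are kept; everything in between is evicted. The same
    rule is applied identically in every layer.
    """
    if seq_len <= num_sink + window:
        return list(range(seq_len))  # nothing needs evicting yet
    return list(range(num_sink)) + list(range(seq_len - window, seq_len))


print(streaming_keep_indices(20, num_sink=2, window=3))  # [0, 1, 17, 18, 19]
```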

PyramidInfer's design was inspired by verifying two hypotheses: Inference Context Redundancy (ICR) and Recent Attention Consistency (RAC). ICR posits that many context keys and values are redundant during inference: in training, every token must predict its next token, so all keys and values are needed, whereas in inference only the last token predicts the next one. Experiments with the 40-layer LLaMA 2-13B model revealed that deeper layers have higher redundancy, so their KV cache can be reduced substantially without affecting output quality. RAC posits that certain keys and values are consistently attended to by recent tokens, which makes it possible to select these pivotal contexts (PVCs) for efficient inference. PyramidInfer leverages these insights to compress the KV cache in both the prefill and generation phases.
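The RAC idea can be illustrated with a simplified selection routine: average the attention that the most recent tokens pay to each earlier token, then keep the top-scoring ones as PVCs alongside the recent tokens themselves. This Python sketch assumes head-averaged attention weights, a plain mean over a fixed recent window, and a single keep ratio per layer; `select_pvcs`, `recent`, and `keep_ratio` are hypothetical names, and the paper's exact scoring may differ.

```python
def select_pvcs(attn: list[list[float]], recent: int, keep_ratio: float) -> list[int]:
    """Pick pivotal-context (PVC) positions from one layer's attention.

    attn[q][k] is the attention weight of query position q on key position k
    (assumed already averaged over heads). The last `recent` queries vote:
    their weights on each earlier context key are averaged, and the top
    `keep_ratio` fraction of context keys is kept. The recent tokens
    themselves are always kept.
    """
    seq_len = len(attn)
    context_len = seq_len - recent
    if context_len <= 0:
        return list(range(seq_len))
    # Mean attention that the recent window pays to each context key.
    scores = [
        sum(attn[q][k] for q in range(context_len, seq_len)) / recent
        for k in range(context_len)
    ]
    num_keep = max(1, round(keep_ratio * context_len))
    # Highest-scoring context keys; sorted() is stable, so ties keep position order.
    top = sorted(range(context_len), key=lambda k: scores[k], reverse=True)[:num_keep]
    return sorted(top) + list(range(context_len, seq_len))


# Toy 6-token layer: the two most recent tokens both focus on tokens 1 and 3.
# Rows 0-3 stay zero because only the recent queries are consulted.
attn = [[0.0] * 6 for _ in range(6)]
attn[4][1], attn[4][3], attn[4][4] = 0.5, 0.3, 0.2
attn[5][1], attn[5][3], attn[5][5] = 0.4, 0.4, 0.2
print(select_pvcs(attn, recent=2, keep_ratio=0.5))  # [1, 3, 4, 5]
```

Under ICR, a pyramid-style scheme would pass a smaller `keep_ratio` to deeper layers, so each successive layer retains fewer context entries.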

PyramidInfer was evaluated across various tasks and models, and it significantly reduced GPU memory usage and increased throughput while maintaining generation quality. The evaluation covered language modeling on wikitext-v2, general LLM benchmarks such as MMLU and BBH, mathematical reasoning on GSM8K, code generation on HumanEval, conversation on MT-Bench, and long-text summarization on LEval. PyramidInfer was tested on LLaMA 2, LLaMA 2-Chat, Vicuna 1.5-16k, and CodeLLaMA at different model sizes. The results showed that PyramidInfer maintained generation quality while using less GPU memory than the full KV cache, and that it significantly outperformed local (recent-context) strategies.

In conclusion, PyramidInfer is an efficient method, inspired by ICR and RAC, for compressing the KV cache during both the prefill and generation phases. It significantly reduces GPU memory usage while largely preserving model performance, making it well suited to deploying large language models in resource-constrained environments. However, PyramidInfer requires additional computation, which limits its speedup at small batch sizes. And although it is the first method to compress the KV cache in the prefill phase, it is not yet lossless, leaving room for future improvement.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.