Google AI Introduces Proofread: A Novel Gboard Feature Enabling Seamless Sentence-Level And Paragraph-Level Corrections With A Single Tap

Gboard, Google’s mobile keyboard app, relies on statistical decoding because touch input on small screens is inherently imprecise, a challenge often called the ‘fat finger’ problem. Studies have shown that without decoding, the per-letter error rate can be as high as 8 to 9 percent. To keep typing smooth, Gboard includes a range of error-correction features. Some are active and run automatically, while others are passive and require the user to take additional manual steps and make selections.
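
To make "statistical decoding" concrete, here is a toy noisy-channel sketch: each candidate word is scored by how well the taps fit its keys (a spatial model) plus how likely the word is a priori (a language model), and the best total wins. The key geometry, the Gaussian touch model, the `sigma` value, and the lexicon probabilities are all hypothetical; this is an illustration of the general idea, not Gboard's actual decoder.

```python
import math

# Hypothetical key centers on a unit grid; each row is shifted half a key to
# roughly mimic a staggered phone keyboard. Not Gboard's real geometry.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_POS = {
    ch: (col + 0.5 * r, float(r))
    for r, row in enumerate(ROWS)
    for col, ch in enumerate(row)
}


def touch_log_likelihood(touches, word, sigma=0.5):
    """log P(touches | word): each tap is Gaussian noise around the intended key."""
    if len(touches) != len(word):
        return -math.inf  # this toy model ignores inserted or dropped taps
    total = 0.0
    for (x, y), ch in zip(touches, word):
        kx, ky = KEY_POS[ch]
        d2 = (x - kx) ** 2 + (y - ky) ** 2
        total += -d2 / (2 * sigma**2) - math.log(2 * math.pi * sigma**2)
    return total


def decode(touches, lexicon):
    """Noisy-channel decoding: argmax over words of log P(touches | word) + log P(word)."""
    return max(
        lexicon,
        key=lambda w: touch_log_likelihood(touches, w) + math.log(lexicon[w]),
    )


# The middle tap lands closer to "o" than to "i", but the prior favors "tie".
taps = [(4.0, 0.0), (7.6, 0.0), (2.0, 0.0)]
print(decode(taps, {"tie": 0.002, "toe": 0.0001}))  # tie
print(decode(taps, {"tie": 0.001, "toe": 0.001}))   # toe (equal priors)
```

With equal priors the spatial evidence decides; with a strong prior the language model overrides a slightly off-target tap, which is how decoding absorbs fat-finger noise.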

Word completion, next-word prediction, smart compose, active key correction (KC), and active auto-correction (AC) work together to make typing easier, correcting errors automatically and offering word candidates in the suggestion bar or inline. Post-correction (PC) supports fixing errors in the last one or more committed words.

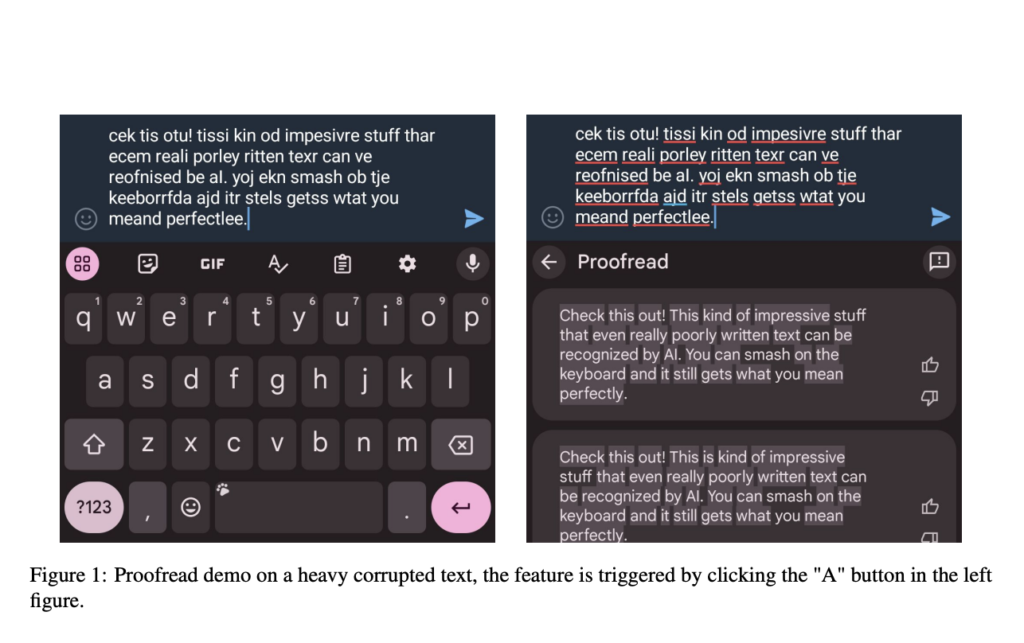

From a user-experience standpoint, Gboard’s current correction methods have two limitations. First, the on-device correction models, such as active key correction (KC), active auto-correction (AC), and post-correction (PC), are compact and fast, but they struggle with more complex errors that require longer-span context. As a result, users still need to type slowly and accurately to keep their errors within what these models can fix. Second, the passive correction tools, such as grammar and spell checkers, are multi-step: users must repair the words they have already committed one error at a time. This is mentally and visually demanding, since users have to monitor what they have written and correct mistakes sequentially after committing, which slows typing. A common strategy among fast Gboard typers is to ignore the words already on screen and focus solely on the keyboard. These ‘fast and sloppy’ typers, who switch to fixing errors only afterward, have asked for a sentence-level or higher-level correction feature.

A recent Google study introduces Proofread, a new feature designed to address the most common complaint of fast typers and boost their productivity. With a single tap, it corrects errors at the sentence and paragraph level. Proofreading falls within the field of Grammatical Error Correction (GEC), which has a long history of research spanning rule-based solutions, statistical methods, and neural network models. The rapid progress of Large Language Models (LLMs) offers a new opportunity to produce high-quality sentence-level grammar corrections.

The system behind Proofread has four main components: data generation, metrics design, model tuning, and model serving. To keep the training data distribution as close as possible to the Gboard domain, the researchers built a carefully designed error-synthesis framework that injects commonly made keyboard mistakes to mimic real user input. They also defined several metrics covering different aspects of model quality. Because a given input rarely has a single correct answer, especially for long examples, the LLM-based checks (one for whether a grammar error still exists and one for whether the original meaning is preserved) are treated as the most important measures for comparing model quality. To obtain an LLM dedicated to proofreading, they applied the InstructGPT recipe: supervised fine-tuning followed by reinforcement learning (RL) tuning. Their RL tuning recipe, tailored to rewrite tasks, substantially improved the foundation models’ proofreading performance. The feature is built on PaLM2-XS, a medium-sized LLM that fits on a single TPU v5 after 8-bit quantization, which lowers serving cost.
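
The article says only that the error-synthesis framework injects commonly made keyboard mistakes; it does not list the error types or rates. The following is a hypothetical sketch of how such noisy/clean training pairs could be generated, assuming four error types (adjacent-key substitution, deletion, double tap, transposition) and a simplified, unstaggered QWERTY grid. The function names `neighbors`, `corrupt`, and `make_pair` are illustrative, not from the paper.

```python
import random

# Simplified QWERTY grid (no stagger); adjacency here is approximate.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]


def neighbors(ch: str) -> list[str]:
    """Keys left, right, above, or below `ch` on the simplified grid."""
    for r, row in enumerate(ROWS):
        c = row.find(ch)
        if c == -1:
            continue
        out = []
        for dr, dc in ((0, -1), (0, 1), (-1, 0), (1, 0)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < len(ROWS) and 0 <= cc < len(ROWS[rr]):
                out.append(ROWS[rr][cc])
        return out
    return []  # not a lowercase ASCII letter on the grid


def corrupt(text: str, rate: float, rng: random.Random) -> str:
    """Inject keyboard-style errors into `text` at roughly `rate` per letter."""
    out: list[str] = []
    i = 0
    while i < len(text):
        ch = text[i]
        if not ch.isalpha() or rng.random() >= rate:
            out.append(ch)  # leave this character untouched
            i += 1
            continue
        op = rng.choice(["substitute", "delete", "duplicate", "transpose"])
        near = neighbors(ch.lower())
        if op == "substitute" and near:
            out.append(rng.choice(near))  # fat-finger hit on an adjacent key
        elif op == "delete":
            pass  # missed keystroke
        elif op == "duplicate":
            out.append(ch + ch)  # accidental double tap
        elif op == "transpose" and i + 1 < len(text):
            out.append(text[i + 1] + ch)  # swapped keystrokes
            i += 1  # the next character was already emitted
        else:
            out.append(ch)  # chosen operation not applicable here
        i += 1
    return "".join(out)


def make_pair(clean: str, rate: float = 0.1, seed: int = 0) -> tuple[str, str]:
    """Return a (noisy input, clean target) training pair."""
    return corrupt(clean, rate, random.Random(seed)), clean


print(make_pair("the quick brown fox jumps over the lazy dog", rate=0.2, seed=1))
```

Seeding a `random.Random` instance per pair keeps the synthetic dataset reproducible; a real pipeline would likely tune the error mix and rates to match observed Gboard typing behavior.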

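The article notes that PaLM2-XS fits on a single TPU v5 after 8-bit quantization but does not say which quantization scheme was used. As an illustrative assumption, the sketch below shows symmetric per-tensor int8 quantization, one common scheme (production systems often use per-channel scales instead), plus the simple arithmetic behind the memory savings. The 1B parameter count is hypothetical and is not PaLM2-XS's size.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def weight_memory_gib(num_params: int, bits: int) -> float:
    """Weight storage in GiB at the given bit width (ignores activations and KV cache)."""
    return num_params * bits / 8 / 2**30


w = [0.5, -1.27, 0.0, 0.033]
q, s = quantize_int8(w)
w_hat = dequantize_int8(q, s)
# Rounding error per weight is at most half a quantization step.
assert all(abs(a - b) <= s / 2 + 1e-12 for a, b in zip(w, w_hat))
# Going from 16-bit to 8-bit weights halves weight memory for any model size
# (shown for a hypothetical 1B-parameter model).
print(weight_memory_gib(1_000_000_000, 16) / weight_memory_gib(1_000_000_000, 8))  # 2.0
```

Halving weight memory is what lets a larger model fit on fewer accelerator chips, which is where the serving-cost reduction comes from.
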
The model is now live, and tens of thousands of Pixel 8 users benefit from it. Careful synthetic data generation, multiple phases of supervised fine-tuning, and RL tuning allowed the researchers to achieve a high-quality model. For the RL tuning stage, they propose a Global Reward and a Direct Reward, which substantially improve the model. The results show that RL tuning effectively reduces grammar errors, yielding a 5.74 percent relative reduction in the Bad ratio of the PaLM2-XS model. After optimizing the model with quantization, bucket keys, input segmentation, and speculative decoding, they deployed it on TPU v5 in the cloud with highly optimized latency. According to the findings, speculative decoding lowered median latency by 39.4 percent.
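
The article credits speculative decoding with a 39.4 percent median-latency reduction but does not describe the draft model or acceptance rule used. The sketch below shows the greedy variant of the general technique: a cheap draft model guesses up to `k` tokens, the large target model verifies them, and the output is identical to plain greedy decoding with the target alone. The toy "models" (plain functions over a fixed sentence) and token names are hypothetical.

```python
from typing import Callable, Sequence

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[Sequence[str]], str]
EOS = "<eos>"


def greedy_decode(target: NextToken, prompt: list[str], max_new: int) -> list[str]:
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    while len(tokens) - len(prompt) < max_new:
        t = target(tokens)
        tokens.append(t)
        if t == EOS:
            break
    return tokens[len(prompt):]


def speculative_decode(target: NextToken, draft: NextToken, prompt: list[str],
                       max_new: int, k: int = 4) -> tuple[list[str], int]:
    """Greedy speculative decoding; returns (output, number of verification rounds)."""
    tokens = list(prompt)
    rounds = 0
    while len(tokens) - len(prompt) < max_new:
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal: list[str] = []
        for _ in range(k):
            t = draft(tokens + proposal)
            proposal.append(t)
            if t == EOS:
                break
        # 2. The target model checks every proposed position. In a real system this
        #    is a single batched forward pass; it is a loop here only for clarity.
        rounds += 1
        accepted: list[str] = []
        for t in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if expected != t or expected == EOS:
                break  # first mismatch (or end): discard the rest of the proposal
        else:
            # Every proposal matched, so the same pass also yields one bonus token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
        if EOS in accepted:
            break
    return tokens[len(prompt):][:max_new], rounds


SENT = ["fix", "all", "errors", "with", "one", "tap", EOS]


def toy_target(ctx: Sequence[str]) -> str:
    return SENT[len(ctx)] if len(ctx) < len(SENT) else EOS


def toy_draft(ctx: Sequence[str]) -> str:
    return "in" if len(ctx) == 3 else toy_target(ctx)  # wrong once, right otherwise


out, rounds = speculative_decode(toy_target, toy_draft, [], max_new=20)
print(out == greedy_decode(toy_target, [], 20), rounds)  # True 2
```

Plain greedy decoding needs seven sequential target calls for this sentence; the speculative version needs two verification rounds. The latency win depends on how often the draft agrees with the target. Sampling-based variants replace the exact-match check with a rejection-sampling acceptance rule, which is not shown here.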

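Input segmentation and bucket keys are listed among the latency optimizations but are not described in the article. One plausible reading, offered here purely as an assumption: long inputs are split into segments (for example, sentences) that can be proofread in parallel, and each segment is padded to one of a few fixed lengths ("buckets") so the accelerator can reuse a precompiled program per shape instead of recompiling for every input length. The bucket sizes, the regex splitter, and the whitespace tokenizer below are hypothetical stand-ins.

```python
import bisect
import re

# Hypothetical padded sequence lengths; each bucket would map to one compiled program.
BUCKETS = (16, 32, 64, 128)


def segment(paragraph: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return [p for p in parts if p]


def bucket_for(length: int, buckets: tuple[int, ...] = BUCKETS) -> int:
    """Smallest bucket that can hold `length` tokens."""
    i = bisect.bisect_left(buckets, length)
    if i == len(buckets):
        raise ValueError(f"length {length} exceeds largest bucket {buckets[-1]}")
    return buckets[i]


def pad_to_bucket(tokens: list[str], pad: str = "<pad>") -> list[str]:
    """Right-pad a token list to its bucket length so shapes stay fixed."""
    return tokens + [pad] * (bucket_for(len(tokens)) - len(tokens))


para = "i has a apple. it are red! did you seen it?"
for sent in segment(para):
    toks = sent.split()  # whitespace split stands in for a real tokenizer
    print(len(pad_to_bucket(toks)), sent)
```

Padding wastes some compute on short segments, but a handful of fixed shapes avoids recompilation and makes batching straightforward, a common trade-off when serving on TPUs.
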
This study demonstrates how LLMs can improve the typing experience and opens several directions for future research: using real-user data, adapting to multiple languages, providing personalized support for different writing styles, and developing privacy-preserving on-device solutions.

Check out the Paper. All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone’s life easier in today’s evolving world.