DeBaTeR: A New AI Method that Leverages Time Information in Neural Graph Collaborative Filtering to Enhance both Denoising and Prediction Performance

Recommender systems are widely used to model user preferences, yet capturing those preferences accurately remains difficult, particularly in neural graph collaborative filtering. These systems apply Graph Neural Networks (GNNs) to user-item interaction histories to mine latent information and capture high-order interactions, but the quality of the collected data is a major obstacle, and malicious attacks that inject fake interactions degrade recommendation quality further. The problem is especially acute in neural graph collaborative filtering, because the message-passing mechanism of GNNs spreads each noisy interaction to neighboring nodes, amplifying its impact and producing recommendations that fail to reflect users' interests.
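The amplification effect can be seen in a toy example. The sketch below is a hypothetical illustration, not DeBaTeR's model: it assumes a LightGCN-style propagation rule (symmetric degree normalization, final embedding taken as the mean over layers) with scalar embeddings, adds a single fake interaction, and reports which nodes' final embeddings change. The function names `propagate` and `affected_nodes` are invented for illustration.

```python
from collections import defaultdict
from math import sqrt


def propagate(edges, emb0, num_layers):
    """LightGCN-style message passing on a user-item graph with scalar embeddings.

    Assumed rule (illustrative only):
      e_a^(k+1) = sum_{b in N(a)} e_b^(k) / sqrt(|N(a)| * |N(b)|)
    The final embedding is the mean of layers 0..num_layers.
    """
    nbrs = defaultdict(list)
    for user, item in edges:
        nbrs[user].append(item)
        nbrs[item].append(user)

    layers = [dict(emb0)]
    for _ in range(num_layers):
        prev = layers[-1]
        # Isolated nodes have no neighbours, so they receive 0 at every layer > 0.
        layers.append({
            node: sum(prev[n] / sqrt(len(nbrs[node]) * len(nbrs[n])) for n in nbrs[node])
            for node in emb0
        })
    return {node: sum(layer[node] for layer in layers) / len(layers) for node in emb0}


def affected_nodes(clean_edges, fake_edge, emb0, num_layers, tol=1e-12):
    """Return the nodes whose final embedding changes when one fake edge is added."""
    before = propagate(clean_edges, emb0, num_layers)
    after = propagate(clean_edges + [fake_edge], emb0, num_layers)
    return {node for node in emb0 if abs(before[node] - after[node]) > tol}


clean = [("u0", "i0"), ("u1", "i0"), ("u1", "i1"), ("u2", "i1")]
emb0 = {"u0": 1.0, "u1": 2.0, "u2": 3.0, "i0": 0.5, "i1": 1.5, "i2": -1.0}
print(sorted(affected_nodes(clean, ("u0", "i2"), emb0, num_layers=2)))
# ['i0', 'i2', 'u0', 'u1']
```

With two layers, the fake edge `(u0, i2)` already alters `u1`, a user whose own interactions are untouched; with four layers it reaches `u2` as well, which is the sense in which deeper message passing amplifies noise.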

Existing attempts to address these challenges fall mainly into two groups: denoising recommender systems and time-aware recommender systems. Denoising methods use strategies such as identifying and down-weighting interactions between dissimilar users and items, pruning samples with large losses during training, and using memory-based techniques to identify clean samples. Time-aware methods are used extensively in sequential recommendation but have seen limited application in collaborative filtering. Most temporal approaches incorporate timestamps into sequential models or build item-item graphs based on temporal order, but they do not address the complex interplay between temporal patterns and noise in user interactions.
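Of the denoising strategies above, pruning large-loss samples is the simplest to sketch. The example below is a generic, hypothetical loss-truncation routine: the linear drop-rate schedule and the names `drop_rate` and `truncated_mean_loss` are assumptions for illustration, not a specific published method. At each step it discards the highest-loss fraction of interactions, on the premise that noisy interactions tend to be harder to fit.

```python
def drop_rate(step, max_rate=0.2, warmup_steps=1000):
    """Hypothetical schedule: the drop fraction grows linearly from 0 to max_rate."""
    return max_rate * min(1.0, step / warmup_steps)


def truncated_mean_loss(losses, rate):
    """Average per-interaction losses after discarding the largest `rate` fraction.

    Returns (mean_loss, kept_indices). Interactions with the largest losses are
    treated as likely noise and excluded from this step's objective.
    """
    if not losses or not 0.0 <= rate < 1.0:
        raise ValueError("need a non-empty loss list and rate in [0, 1)")
    n_drop = int(len(losses) * rate)
    order = sorted(range(len(losses)), key=lambda idx: losses[idx])
    kept = sorted(order[: len(losses) - n_drop])
    return sum(losses[idx] for idx in kept) / len(kept), kept


losses = [0.1, 2.0, 0.3, 0.2, 5.0]
mean_loss, kept = truncated_mean_loss(losses, rate=0.4)
print(kept)  # [0, 2, 3]: the two largest losses (indices 1 and 4) are dropped
```

Ramping the drop rate up gradually is a common design choice in this family of methods, since early in training all losses are large and the model has not yet separated clean from noisy interactions.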

Researchers from the University of Illinois at Urbana-Champaign and Amazon, both in the USA, have proposed DeBaTeR, a method for denoising bipartite temporal graphs in recommender systems. It comes in two variants, DeBaTeR-A and DeBaTeR-L. DeBaTeR-A reweights the adjacency matrix using a reliability score computed from time-aware user and item embeddings, with both soft and hard assignment mechanisms for handling noisy interactions. DeBaTeR-L instead uses a weight generator that takes time-aware embeddings as input to identify potentially noisy interactions and down-weight them in the loss function.
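The article does not give DeBaTeR's equations, so the following is only a hypothetical sketch of the two ideas under stated assumptions. The sinusoidal time encoding, additive fusion with the base embedding, sigmoid-of-dot-product reliability score, 0.5 hard threshold, BPR as the training loss, and every function name are inventions for illustration, not the authors' formulation. `reweight_adjacency` mirrors DeBaTeR-A (soft edge weights versus hard keep/drop), and `weighted_bpr_loss` mirrors DeBaTeR-L (down-weighting suspicious positives in the loss), with the reliability score standing in for the paper's weight generator.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def time_encoding(t, dim, scale=10_000.0):
    """Hypothetical sinusoidal encoding of timestamp t (dim must be even)."""
    enc = []
    for k in range(dim // 2):
        freq = 1.0 / scale ** (2 * k / dim)
        enc += [math.sin(t * freq), math.cos(t * freq)]
    return enc


def time_aware(emb, t):
    """Hypothetical fusion: add the time encoding element-wise to a base embedding."""
    return [e + s for e, s in zip(emb, time_encoding(t, len(emb)))]


def score(user_emb, item_emb, t):
    """Dot product of time-aware user and item embeddings at time t."""
    return sum(a * b for a, b in zip(time_aware(user_emb, t), time_aware(item_emb, t)))


def reliability(user_emb, item_emb, t):
    """Assumed reliability score in (0, 1): sigmoid of the time-aware score."""
    return sigmoid(score(user_emb, item_emb, t))


def reweight_adjacency(interactions, users, items, mode="soft", threshold=0.5):
    """DeBaTeR-A-style idea: edge weight = reliability (soft) or a 0/1 keep mask (hard)."""
    weights = {}
    for u, i, t in interactions:
        r = reliability(users[u], items[i], t)
        weights[(u, i, t)] = r if mode == "soft" else float(r >= threshold)
    return weights


def weighted_bpr_loss(triples, users, items):
    """DeBaTeR-L-style idea: BPR loss with each positive scaled by a weight.

    Here the weight is simply the reliability score; the article describes a
    dedicated weight generator built on time-aware embeddings instead.
    """
    total = 0.0
    for u, pos, neg, t in triples:
        margin = score(users[u], items[pos], t) - score(users[u], items[neg], t)
        # -log(sigmoid(margin)) == softplus(-margin), computed stably.
        bpr = max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
        total += reliability(users[u], items[pos], t) * bpr
    return total / len(triples)


users = {"alice": [2.0, 2.0, 2.0, 2.0]}
items = {"liked": [2.0, 2.0, 2.0, 2.0], "fake": [-2.0, -2.0, -2.0, -2.0]}
edges = [("alice", "liked", 0.0), ("alice", "fake", 0.0)]
print(reweight_adjacency(edges, users, items, mode="hard"))
# {('alice', 'liked', 0.0): 1.0, ('alice', 'fake', 0.0): 0.0}
```

In this toy setup the interaction with the dissimilar item `fake` is pruned under hard assignment and receives a near-zero weight under soft assignment; in the loss-based variant, treating it as a positive contributes almost nothing to the weighted BPR loss, even though its unweighted BPR term is large.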

DeBaTeR's prediction and denoising performance is evaluated on both vanilla and artificially noised datasets. For the vanilla datasets, filtering keeps only high-quality interactions (ratings ≥ 4 for Yelp and ≥ 4.5 for Amazon Movies and TV) from users and items with substantial engagement (more than 50 reviews). Each dataset is split 7:3 into training and test sets, and the noisy variants are created by adding 20% random interactions to the training sets. To account for time, the earliest timestamp in each user's test set serves as that user's query time, and results are averaged over four experimental runs.
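A minimal sketch of this protocol, assuming records of the form `(user, item, rating, timestamp)`. Several details are assumptions not given in the article and are labeled in the code: review counts are taken before rating filtering, the 7:3 split is chronological per user, "20% random interactions" means 20% of the training-set size, and injected interactions get uniformly random timestamps within the training period. All function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def filter_interactions(records, min_rating, min_reviews=50):
    """Keep ratings >= min_rating from users and items with more than min_reviews reviews.

    Assumption: review counts are computed over all records, before rating filtering.
    """
    user_counts = Counter(r[0] for r in records)
    item_counts = Counter(r[1] for r in records)
    return [
        r for r in records
        if r[2] >= min_rating
        and user_counts[r[0]] > min_reviews
        and item_counts[r[1]] > min_reviews
    ]


def split_per_user(records, train_frac=0.7):
    """Assumed chronological per-user split: earliest 70% to train, the rest to test."""
    by_user = defaultdict(list)
    for r in records:
        by_user[r[0]].append(r)
    train, test = [], []
    for user_records in by_user.values():
        user_records.sort(key=lambda r: r[3])
        cut = round(len(user_records) * train_frac)
        train += user_records[:cut]
        test += user_records[cut:]
    return train, test


def query_times(test):
    """Query time per user = earliest timestamp among that user's test interactions."""
    times = {}
    for user, _, _, ts in test:
        times[user] = min(ts, times.get(user, ts))
    return times


def inject_noise(train, users, items, ratio=0.2, seed=0):
    """Add round(ratio * len(train)) random unseen user-item pairs; rating=None marks them."""
    rng = random.Random(seed)
    seen = {(r[0], r[1]) for r in train}
    n_fake = round(ratio * len(train))
    if n_fake > len(users) * len(items) - len(seen):
        raise ValueError("not enough unseen user-item pairs")
    t_min, t_max = min(r[3] for r in train), max(r[3] for r in train)
    fake = []
    while len(fake) < n_fake:  # rejection sampling over unseen pairs
        pair = (rng.choice(users), rng.choice(items))
        if pair not in seen:
            seen.add(pair)
            fake.append((pair[0], pair[1], None, rng.uniform(t_min, t_max)))
    return train + fake


records = [(f"u{u}", f"i{i}", 5.0, 100 * u + i) for u in range(2) for i in range(10)]
records += [("u2", "i0", 5.0, 0), ("u1", "i3", 2.0, 999)]  # low-activity user, low rating
# Toy threshold; the article uses > 50 reviews and min_rating 4 (Yelp) or 4.5 (Amazon Movies and TV).
filtered = filter_interactions(records, min_rating=4.0, min_reviews=1)
train, test = split_per_user(filtered)
all_users = sorted({r[0] for r in filtered})
all_items = sorted({r[1] for r in filtered})
noisy_train = inject_noise(train, all_users, all_items, ratio=0.2, seed=0)
print(len(filtered), len(train), len(test), len(noisy_train), query_times(test))
# 20 14 6 17 {'u0': 7, 'u1': 107}
```

Only the training set is corrupted; the test set stays clean, so any drop in test metrics on the noisy variant reflects how well a method resists noise seen during training.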

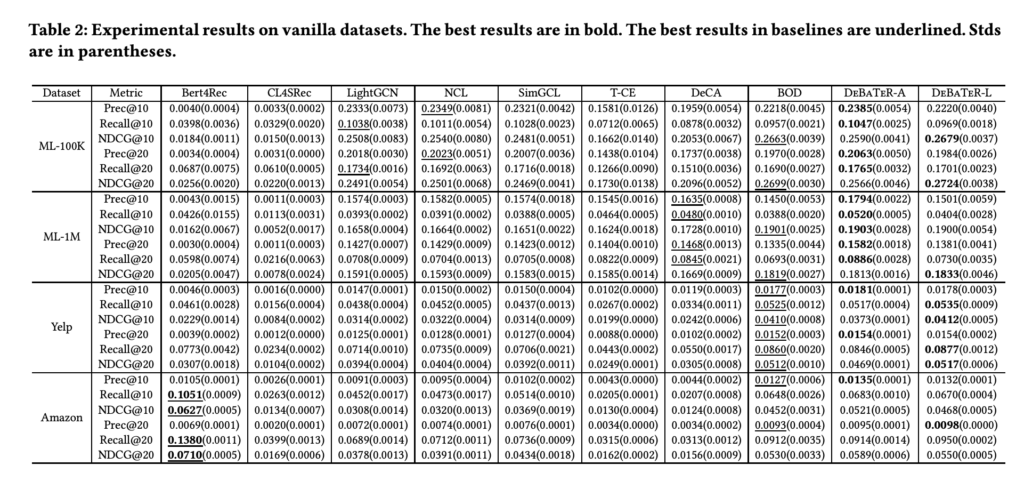

On the question of how the proposed approach compares with state-of-the-art denoising and general neural graph collaborative filtering methods, the experimental results show that both DeBaTeR variants outperform the baselines across multiple datasets and metrics. DeBaTeR-L achieves higher NDCG scores, which makes it better suited to ranking tasks, while DeBaTeR-A achieves better precision and recall, which indicates its effectiveness for retrieval tasks. DeBaTeR-L is also more robust on noisy datasets: there it outperforms DeBaTeR-A on more metrics than it does on the vanilla datasets. Both variants show significant relative improvements over the seven baseline methods, confirming the effectiveness of the approach.
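The distinction between ranking and retrieval metrics can be made concrete. Below is a minimal sketch of the standard binary-relevance definitions of Precision@K, Recall@K, and NDCG@K for a single user; the function name is hypothetical, and the exact cutoffs and averaging used in the paper are not stated in the article. It shows why NDCG rewards ranking quality: two lists with the same hits get identical precision and recall, but different NDCG depending on where the hits appear.

```python
import math


def precision_recall_ndcg_at_k(ranked, relevant, k):
    """Binary-relevance Precision@K, Recall@K, and NDCG@K for a single user."""
    top_k = ranked[:k]
    hits = [item in relevant for item in top_k]
    precision = sum(hits) / k
    recall = sum(hits) / len(relevant)
    # DCG discounts a hit at 1-based rank r by 1 / log2(r + 1); `rank` here is 0-based.
    dcg = sum(1.0 / math.log2(rank + 2) for rank, hit in enumerate(hits) if hit)
    # Ideal DCG: all relevant items (up to k) placed at the top of the list.
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(min(len(relevant), k)))
    return precision, recall, dcg / idcg


relevant = {"a", "c", "e"}
print(precision_recall_ndcg_at_k(["a", "b", "c"], relevant, k=3))
print(precision_recall_ndcg_at_k(["b", "a", "c"], relevant, k=3))
```

Both lists contain the same two relevant items in the top three, so precision and recall are 2/3 in each case, but the second list pushes a hit from rank 1 down to rank 2 and therefore scores a lower NDCG.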

In this paper, the researchers introduced DeBaTeR, an approach that addresses noise in recommender systems through time-aware embedding generation. Its two strategies, DeBaTeR-A (adjacency matrix reweighting) and DeBaTeR-L (loss function reweighting), offer flexible options for different recommendation scenarios. Extensive experiments on real-world datasets show that the framework's strength comes from integrating temporal information into user and item embeddings. Future research directions include exploring additional time-aware neural graph collaborative filtering algorithms and extending the denoising capabilities to user profiles and item attributes.



Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.