Agent Symbolic Learning: An AI Framework for Agent Learning that Jointly Optimizes All Symbolic Components within an Agent System

Large language models (LLMs) have revolutionized the field of artificial intelligence, enabling the creation of language agents capable of autonomously solving complex tasks. However, the development of these agents faces significant challenges. The current approach involves manually decomposing tasks into LLM pipelines, with prompts and tools stacked together. This process is labor-intensive and engineering-centric, limiting the adaptability and robustness of language agents. The complexity of this manual customization makes it nearly impossible to optimize language agents on diverse datasets in a data-centric manner, hindering their versatility and applicability to new tasks or data distributions. Researchers are now seeking ways to transition from this engineering-centric approach to a more data-centric learning paradigm for language agent development.

Prior studies have attempted to address language agent optimization challenges through automated prompt engineering and agent optimization methods. These approaches fall into two categories: prompt-based and search-based. Prompt-based methods optimize specific components within an agent pipeline, while search-based approaches look for optimal prompts or nodes in a combinatorial space. However, these methods have limitations, including difficulty with complex real-world tasks and a tendency to get stuck in local optima. They also cannot holistically optimize the entire agent system. Other research directions, such as synthesizing data for LLM fine-tuning and exploring inter-task transfer learning, show promise but don’t fully address the need for comprehensive agent system optimization.

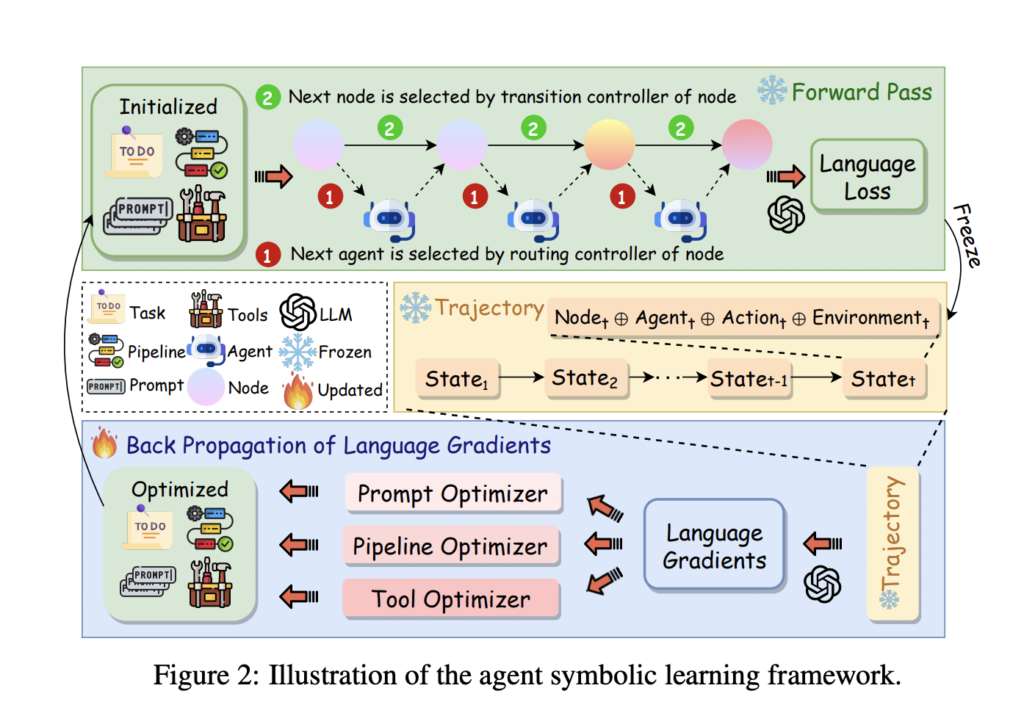

Researchers from AIWaves Inc. introduce the agent symbolic learning framework, an approach to training language agents that draws inspiration from neural network learning. The framework draws an analogy between language agents and neural nets, mapping agent pipelines to computational graphs, nodes to layers, and prompts and tools to weights. This mapping enables a process akin to backpropagation: the framework executes the agent, evaluates performance using a “language loss,” and generates “language gradients” by propagating that loss backward through the pipeline. These gradients guide the joint optimization of all symbolic components, including prompts, tools, and the overall pipeline structure. Because every component is updated together rather than in isolation, the approach is designed to avoid the local optima of component-wise optimization, to learn effectively on complex tasks, and to support multi-agent systems. It also allows agents to self-evolve after deployment, potentially shifting language agent research from engineering-centric to data-centric.

The framework is built from a small set of components, each modeled on a concept from neural network training. The key components include:

Agent Pipeline: Represents the sequence of nodes processing input data.

Nodes: Individual steps within the pipeline, similar to neural network layers.

Trajectory: Stores information during the forward pass for gradient back-propagation.

Language Loss: Textual measure of discrepancy between expected and actual outcomes.

Language Gradient: Textual analyses for updating the agent components.
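The components listed above can be pictured as plain data structures. The following is a minimal sketch, not the framework's actual API: the class names `Node`, `StepRecord`, `Trajectory`, and `AgentPipeline`, their fields, and the `echo_llm` stub are all hypothetical and chosen only to mirror the terms in the list.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: maps a prompt string to a text response.
LLM = Callable[[str], str]


@dataclass
class Node:
    """One step in the pipeline; its prompt plays the role of a layer's weights."""
    name: str
    prompt: str

    def run(self, llm: LLM, text: str) -> str:
        return llm(f"{self.prompt}\n\nInput: {text}")


@dataclass
class StepRecord:
    """What one node saw and produced during the forward pass."""
    node_name: str
    prompt: str
    input_text: str
    output_text: str
    language_gradient: str = ""  # textual feedback, filled in during back-propagation


@dataclass
class Trajectory:
    """Everything recorded during one forward pass, plus the textual loss."""
    steps: List[StepRecord] = field(default_factory=list)
    language_loss: str = ""


@dataclass
class AgentPipeline:
    """An ordered sequence of nodes that processes the input."""
    nodes: List[Node]

    def forward(self, llm: LLM, task: str) -> Trajectory:
        trajectory = Trajectory()
        text = task
        for node in self.nodes:
            output = node.run(llm, text)
            trajectory.steps.append(StepRecord(node.name, node.prompt, text, output))
            text = output  # each node's output feeds the next node
        return trajectory


def echo_llm(prompt: str) -> str:
    # Toy model used only so the sketch runs without a real LLM.
    return f"[{len(prompt)} chars processed]"


pipeline = AgentPipeline([
    Node("plan", "Outline a solution."),
    Node("solve", "Carry out the outline."),
])
trajectory = pipeline.forward(echo_llm, "Add 2 and 3.")
```

Note that the loss and gradients here are strings rather than numbers, which is the main point of the analogy: the trajectory stores text so that later feedback can be attached to the node that produced it.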

The learning procedure involves a forward pass, language loss computation, back-propagation of language gradients, and gradient-based updates using symbolic optimizers. These optimizers include PromptOptimizer, ToolOptimizer, and PipelineOptimizer, each designed to update specific components of the agent system. The framework also supports batched training for more stable optimization.
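The four stages of this procedure can be sketched as a single training step. This is an illustrative outline under stated assumptions, not the released implementation: the function names, the prompt wording, and the `stub_llm` placeholder are hypothetical, and only a prompt update is shown (the tool and pipeline optimizers described above are omitted).

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def forward(llm: LLM, prompts: List[str], task: str) -> List[Dict[str, str]]:
    """Run each node in order, recording its input and output in a trajectory."""
    trajectory, text = [], task
    for prompt in prompts:
        output = llm(f"{prompt}\n\nInput: {text}")
        trajectory.append({"prompt": prompt, "input": text, "output": output})
        text = output
    return trajectory


def language_loss(llm: LLM, task: str, final_output: str) -> str:
    """Ask the model for a textual critique of the final result."""
    return llm(f"Critique this answer.\nTask: {task}\nAnswer: {final_output}")


def backward(llm: LLM, trajectory: List[Dict[str, str]], loss: str) -> List[str]:
    """Walk the nodes from last to first; each node's textual gradient is
    conditioned on the feedback produced for the node after it."""
    gradients, downstream = [], loss
    for step in reversed(trajectory):
        gradient = llm(
            f"Downstream feedback: {downstream}\n"
            f"Node prompt: {step['prompt']}\n"
            f"Node output: {step['output']}\n"
            "Explain how this node should change."
        )
        gradients.append(gradient)
        downstream = gradient
    gradients.reverse()  # restore pipeline order
    return gradients


def update_prompts(llm: LLM, prompts: List[str], gradients: List[str]) -> List[str]:
    """Rewrite each prompt using its own textual gradient."""
    return [
        llm(f"Rewrite this prompt.\nPrompt: {p}\nFeedback: {g}")
        for p, g in zip(prompts, gradients)
    ]


def train_step(llm: LLM, prompts: List[str], task: str) -> List[str]:
    trajectory = forward(llm, prompts, task)
    loss = language_loss(llm, task, trajectory[-1]["output"])
    gradients = backward(llm, trajectory, loss)
    return update_prompts(llm, prompts, gradients)


def stub_llm(prompt: str) -> str:
    # Deterministic toy model so the sketch runs without network access.
    return f"response<{len(prompt)}>"


new_prompts = train_step(
    stub_llm, ["Outline a solution.", "Carry out the outline."], "Add 2 and 3."
)
```

In a batched variant, one would presumably collect the gradients from several tasks before calling the update once, which is the textual counterpart of averaging gradients over a mini-batch.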

The agent symbolic learning framework demonstrates superior performance across LLM benchmarks, software development, and creative writing tasks. It consistently outperforms other methods, showing significant improvements on complex benchmarks like MATH. In software development and creative writing, the framework’s performance gap widens further, surpassing specialized algorithms and frameworks. Its success stems from the comprehensive optimization of the entire agent system, effectively discovering optimal pipelines and prompts for each step. The framework shows robustness and effectiveness in optimizing language agents for complex, real-world tasks where traditional methods struggle, highlighting its potential to advance language agent research and applications.

The agent symbolic learning framework introduces an innovative approach to language agent optimization. Inspired by connectionist learning, it jointly optimizes all symbolic components within an agent system using language-based loss, gradients, and optimizers. This enables agents to effectively handle complex real-world tasks and self-evolve after deployment. Experiments demonstrate its superiority across various task complexities. By shifting from model-centric to data-centric agent research, this framework represents a significant step towards artificial general intelligence. The open-sourcing of code and prompts aims to accelerate progress in this field, potentially revolutionizing language agent development and applications.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.