PromSec: An AI Algorithm for Prompt Optimization for Secure and Functioning Code Generation Using LLM

Software development has benefited greatly from using Large Language Models (LLMs) to produce high-quality source code, mainly because coding tasks now take less time and money to complete. Despite these advantages, both current research and real-world assessments show that LLMs frequently produce code that is functional but contains security flaws. This limitation arises because these models are trained on enormous volumes of open-source data, which often contains unsafe or ineffective coding practices. As a result, even when LLM-generated code works, these vulnerabilities can compromise the security and reliability of the resulting software, especially in security-sensitive applications.

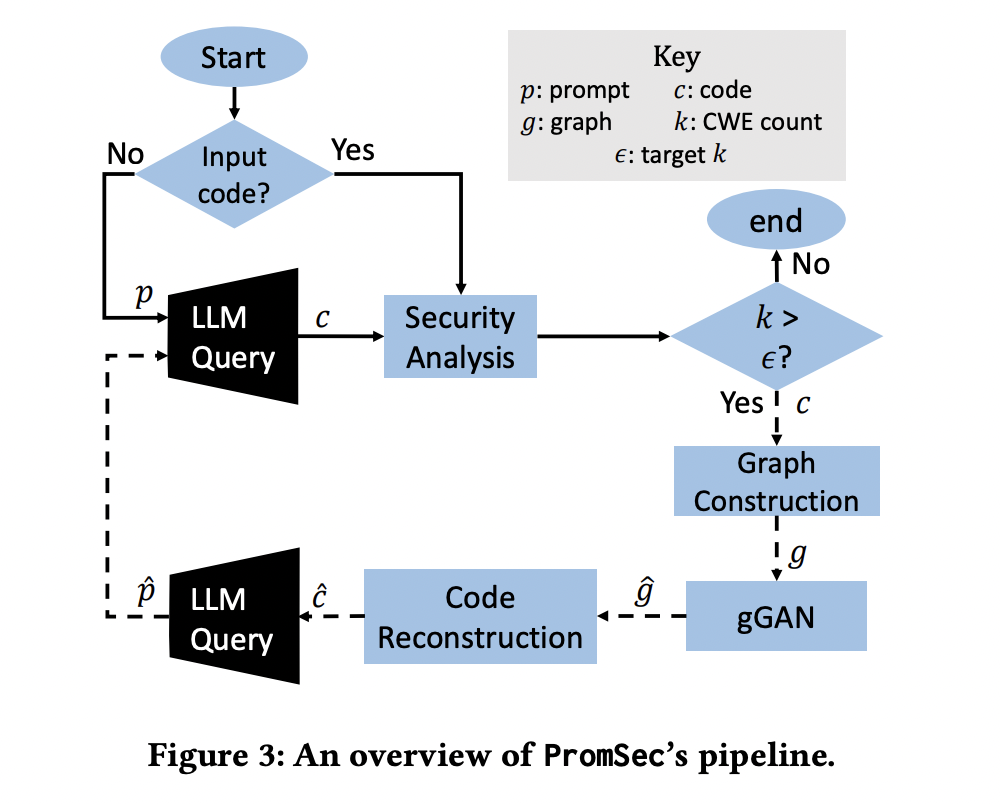

Tackling this problem requires a method that automatically refines the instructions given to LLMs so that the generated code is both secure and functional. A team of researchers from the New Jersey Institute of Technology and Qatar Computing Research Institute has introduced PromSec, a solution that optimizes LLM prompts to generate secure and functional code. It combines two essential parts, described below.

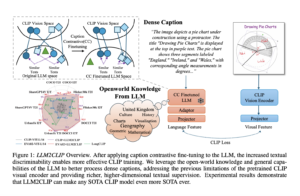

Vulnerability Removal: PromSec employs a generative adversarial graph neural network (gGAN) to find and fix security flaws in the generated code.

Interactive Loop: Between the gGAN and the LLM, PromSec establishes an iterative feedback loop. After vulnerabilities are found and fixed, the gGAN creates better prompts based on the updated code, which the LLM uses as a guide to write more secure code in subsequent iterations. As a result of the models’ interaction, the prompts are improved in terms of functionality and code security.
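The feedback loop described above can be sketched in a few lines of Python. This is only an illustrative outline, not PromSec's implementation: the four callables (`generate_code`, `find_issues`, `repair_code`, `prompt_from_code`) are hypothetical stand-ins for the LLM, a security analyzer, the gGAN, and the step that derives a new prompt from the repaired code, and the toy versions below merely swap `eval` for `ast.literal_eval` so the loop can run end to end.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LoopResult:
    prompt: str
    code: str
    iterations: int
    remaining_issues: List[str]


def optimize_prompt(
    prompt: str,
    generate_code: Callable[[str], str],           # hypothetical: stands in for the LLM
    find_issues: Callable[[str], List[str]],       # hypothetical: stands in for a security scan
    repair_code: Callable[[str, List[str]], str],  # hypothetical: stands in for the gGAN fix
    prompt_from_code: Callable[[str], str],        # hypothetical: derives a prompt from code
    max_iterations: int = 5,
) -> LoopResult:
    """Repeat generate -> scan -> repair -> re-prompt until no issues remain."""
    code = generate_code(prompt)
    issues = find_issues(code)
    iterations = 0
    while issues and iterations < max_iterations:
        repaired = repair_code(code, issues)
        prompt = prompt_from_code(repaired)  # improved prompt based on the fixed code
        code = generate_code(prompt)         # the LLM regenerates from the new prompt
        issues = find_issues(code)
        iterations += 1
    return LoopResult(prompt, code, iterations, issues)


# Toy stand-ins (assumptions for illustration only).
def toy_llm(prompt: str) -> str:
    if "literal_eval" in prompt:
        return "import ast\nvalue = ast.literal_eval(user_input)"
    return "value = eval(user_input)"


def toy_scanner(code: str) -> List[str]:
    # Ignore the safe call before looking for a bare eval(.
    stripped = code.replace("ast.literal_eval(", "")
    return ["unsafe eval"] if "eval(" in stripped else []


def toy_repair(code: str, issues: List[str]) -> str:
    return "import ast\n" + code.replace("eval(", "ast.literal_eval(")


def toy_prompt_from_code(code: str) -> str:
    base = "Parse user_input into a Python value"
    return base + " using ast.literal_eval" if "literal_eval" in code else base


result = optimize_prompt(
    "Parse user_input into a Python value",
    toy_llm, toy_scanner, toy_repair, toy_prompt_from_code,
)
print(result.prompt)
print(result.iterations, result.remaining_issues)
```

The `max_iterations` cap is a design choice of this sketch: it bounds the number of LLM calls in case the loop does not converge.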

A distinctive feature of PromSec is its application of contrastive learning within the gGAN, which lets it treat code generation as a dual-objective optimization problem. This means that PromSec reduces the number of LLM inferences needed while also improving the code’s functionality and security. Consequently, the system can generate secure and dependable code more quickly, saving the time and computing power otherwise required for repeated rounds of code generation and security analysis.
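To give a feel for how a contrastive objective can balance two goals at once, here is a small sketch using a triplet-style margin loss, a common contrastive formulation. It is swapped in purely for illustration and is not the loss used in PromSec, whose exact form is not given here. The embeddings, the function names, and the `margin` parameter are all assumptions: the candidate fix is pulled toward a secure, functionally equivalent reference and pushed away from a vulnerable variant.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_contrastive_loss(
    candidate: Sequence[float],           # embedding of the proposed fix (assumed)
    secure_equivalent: Sequence[float],   # positive: secure and functionally equivalent
    vulnerable_variant: Sequence[float],  # negative: code that still has the flaw
    margin: float = 1.0,                  # hypothetical hyperparameter
) -> float:
    """Zero once the candidate is closer to the positive than to the
    negative by at least `margin`; positive otherwise."""
    d_pos = euclidean(candidate, secure_equivalent)
    d_neg = euclidean(candidate, vulnerable_variant)
    return max(0.0, d_pos - d_neg + margin)


# A candidate near the secure reference and far from the vulnerable one.
good = triplet_contrastive_loss((0.0, 0.0), (0.0, 1.0), (3.0, 4.0))
# A candidate near the vulnerable variant and far from the secure reference.
bad = triplet_contrastive_loss((0.0, 0.0), (3.0, 4.0), (0.0, 1.0))
print(good)  # 0.0
print(bad)   # 5.0
```

In this toy setup, the distance to the positive loosely plays the role of the functionality objective and the distance to the negative the security objective, so minimizing a single loss trades off both.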

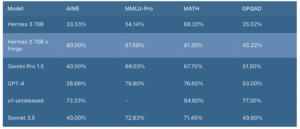

PromSec’s effectiveness has been demonstrated through extensive testing on datasets of Python and Java code. The results confirm that PromSec considerably raises the security of the generated code while preserving its intended functionality. Even compared with the most advanced techniques, PromSec can fix vulnerabilities that other approaches miss. PromSec also significantly reduces operational costs by minimizing the number of LLM queries, the time spent on security analysis, and the total processing overhead.

Generalizability is another important benefit of PromSec. Prompts optimized for one LLM can be used with another, even across different programming languages. These prompts can also address previously unseen vulnerabilities, which makes PromSec a reliable option for a variety of coding contexts.

The team has summarized their primary contributions as follows.

PromSec is introduced as a novel method that automatically optimizes LLM prompts to produce secure source code while preserving the code’s intended functionality.

The gGAN model, a graph generative adversarial network, is presented. This model frames the problem of fixing source code security issues as a dual-objective optimization task that balances code security and functionality. Using a novel contrastive loss function, the gGAN applies semantic-preserving security improvements, ensuring that the code keeps its intended functionality while becoming more secure.

Comprehensive experiments show that PromSec can greatly enhance the functionality and security of code written by LLMs. The optimized prompts produced by PromSec have been shown to apply across several programming languages, address a variety of Common Weakness Enumerations (CWEs), and transfer between different LLMs.

In conclusion, PromSec is a major step forward in the use of LLMs for secure code generation. By mitigating the security flaws in LLM-generated code and providing a scalable, affordable solution, it can significantly increase the reliability of LLMs for large-scale software development. This development helps ensure that LLMs can be securely and consistently incorporated into practical coding workflows, which could eventually broaden their application across a range of industries.

Check out the Paper. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.