Prometheus-Eval and Prometheus 2: Setting New Standards in LLM Evaluation and Open-Source Innovation with State-of-the-art Evaluator Language Model

In natural language processing (NLP), researchers constantly strive to improve language models, which play a crucial role in text generation, translation, and sentiment analysis. As these models grow more capable, evaluating them effectively requires equally sophisticated tools and methods. One such tool is Prometheus-Eval.

Prometheus-Eval is a repository that provides tools for training, evaluating, and using language models specialized in evaluating other language models. It includes the Prometheus-eval Python package, which offers a simple interface for evaluating instruction-response pairs. This package supports both absolute and relative grading methods, enabling comprehensive evaluations. The absolute grading method outputs a score between 1 and 5, while the relative grading method compares responses and determines the better one. The tool also includes evaluation datasets and scripts for training or fine-tuning Prometheus models on custom datasets.
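To make the two grading modes concrete, here is a minimal sketch of how an evaluator's text output could be turned into a verdict. It assumes the evaluator writes free-text feedback followed by a `[RESULT]` marker and then either an integer from 1 to 5 (absolute grading) or the letter A or B (relative grading). The function names `parse_absolute` and `parse_relative` are hypothetical helpers written for this illustration, not part of the Prometheus-eval package.

```python
import re

# Assumed output convention: "<feedback text> [RESULT] <verdict>".
ABSOLUTE_PATTERN = re.compile(r"\[RESULT\]\s*([1-5])\b")
RELATIVE_PATTERN = re.compile(r"\[RESULT\]\s*([AB])\b")


def parse_absolute(output: str) -> int:
    """Extract a 1-5 score from an absolute-grading output."""
    match = ABSOLUTE_PATTERN.search(output)
    if match is None:
        raise ValueError("no valid 1-5 score found after [RESULT]")
    return int(match.group(1))


def parse_relative(output: str) -> str:
    """Extract the preferred response ('A' or 'B') from a relative-grading output."""
    match = RELATIVE_PATTERN.search(output)
    if match is None:
        raise ValueError("no valid A/B verdict found after [RESULT]")
    return match.group(1)
```

Rejecting anything outside the expected range, rather than guessing, keeps malformed generations from silently skewing aggregate scores.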

The key strength of Prometheus-Eval is its ability to simulate human judgments and proprietary LM-based evaluations. Because it is an open and transparent evaluation framework, it removes the reliance on closed-source models for assessment, lets users build internal evaluation pipelines without worrying about GPT version updates, and keeps evaluation affordable. Prometheus-Eval is accessible to many users, requiring only consumer-grade GPUs for operation.

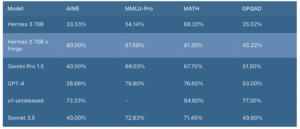

Building on the success of Prometheus-Eval, researchers from KAIST AI, LG AI Research, Carnegie Mellon University, MIT, Allen Institute for AI, and the University of Illinois Chicago have introduced Prometheus 2, a state-of-the-art evaluator language model that offers significant improvements over its predecessor. Prometheus 2 (8x7B) supports both direct assessment (absolute grading) and pairwise ranking (relative grading) formats, enhancing the flexibility and accuracy of evaluations.

Prometheus 2 shows a Pearson correlation of 0.6 to 0.7 with GPT-4-1106 on a 5-point Likert scale across multiple direct assessment benchmarks, including VicunaBench, MT-Bench, and FLASK. It also reaches 72% to 85% agreement with human judgments across multiple pairwise ranking benchmarks, such as HHH Alignment, MT Bench Human Judgment, and Auto-J Eval. These results indicate that the model's verdicts track both GPT-4 scores and human preferences closely.
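For readers unfamiliar with the two statistics, the sketch below shows how they could be computed from paired verdicts. It is a plain-Python illustration, not the benchmark code used by the authors; the function names `pearson` and `agreement` are chosen here for clarity.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


def agreement(judge: list[str], human: list[str]) -> float:
    """Fraction of pairwise comparisons where the judge picks the human's choice."""
    if len(judge) != len(human) or not judge:
        raise ValueError("need two non-empty lists of equal length")
    return sum(j == h for j, h in zip(judge, human)) / len(judge)
```

Correlation suits direct assessment, where both evaluators produce 1-to-5 scores, while agreement suits pairwise ranking, where each verdict is simply A or B.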

Prometheus 2 is also designed to be accessible and efficient. Prometheus 2 (7B), a lighter version of the 8x7B model, requires only 16 GB of VRAM, making it suitable for running on consumer GPUs. This accessibility broadens its usability, allowing more researchers to benefit from its evaluation capabilities without expensive hardware. The 7B model achieves at least 80% of its larger counterpart's evaluation performance, outperforming Llama-2-70B and performing on par with Mixtral-8x7B.
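A rough back-of-the-envelope estimate shows why a 7B-class model fits on a 16 GB card. The sketch below counts weight memory only, and it assumes a nominal 7 billion parameters stored as 16-bit floats; the exact parameter count and serving precision are assumptions, and activations and the KV cache need additional headroom.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Assumption: a nominal 7e9 parameters at 2 bytes each (16-bit floats).
estimate = weight_memory_gb(7e9)
print(f"~{estimate:.0f} GB for weights, before activations and KV cache")
```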

The Prometheus-Eval package offers a straightforward interface for evaluating instruction-response pairs using Prometheus 2. Users can easily switch between absolute and relative grading modes by providing different input prompt formats and system prompts. The tool allows for integrating various datasets, ensuring comprehensive and detailed evaluations. Batch grading is also supported, providing more than a tenfold speedup for multiple responses, making it highly efficient for large-scale evaluations.
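The idea of switching modes through the prompt format, and of grading many responses per model call, can be sketched as follows. Everything here is hypothetical: the templates, the helper names, and the `generate` callable stand in for whatever the package and inference backend actually provide, and the wording of the real Prometheus prompts differs.

```python
from typing import Callable

# Hypothetical templates; the real Prometheus prompts are more detailed.
ABSOLUTE_TEMPLATE = (
    "Instruction: {instruction}\nResponse: {response}\n"
    "Rubric: {rubric}\nGive feedback, then a score from 1 to 5."
)
RELATIVE_TEMPLATE = (
    "Instruction: {instruction}\nResponse A: {response_a}\n"
    "Response B: {response_b}\nRubric: {rubric}\n"
    "Give feedback, then state which response is better, A or B."
)


def build_absolute_prompts(
    instructions: list[str], responses: list[str], rubric: str
) -> list[str]:
    """One absolute-grading prompt per instruction-response pair."""
    if len(instructions) != len(responses):
        raise ValueError("instructions and responses must align")
    return [
        ABSOLUTE_TEMPLATE.format(instruction=i, response=r, rubric=rubric)
        for i, r in zip(instructions, responses)
    ]


def build_relative_prompt(
    instruction: str, response_a: str, response_b: str, rubric: str
) -> str:
    """A single relative-grading prompt comparing two responses."""
    return RELATIVE_TEMPLATE.format(
        instruction=instruction,
        response_a=response_a,
        response_b=response_b,
        rubric=rubric,
    )


def grade_in_batches(
    prompts: list[str],
    generate: Callable[[list[str]], list[str]],
    batch_size: int = 8,
) -> list[str]:
    """Send prompts to the evaluator in groups instead of one call per prompt."""
    outputs: list[str] = []
    for start in range(0, len(prompts), batch_size):
        outputs.extend(generate(prompts[start:start + batch_size]))
    return outputs
```

Grouping prompts lets the inference backend process them together, which is where batch speedups typically come from; the actual gain depends on the backend and hardware.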

In conclusion, Prometheus-Eval and Prometheus 2 address the critical need for reliable and transparent evaluation tools in NLP. Prometheus-Eval offers a robust framework for evaluating language models, ensuring fairness and accessibility. Prometheus 2 builds on this foundation, providing advanced evaluation capabilities with impressive performance metrics. Researchers can now assess their models more confidently, knowing they have a comprehensive and accessible tool.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.