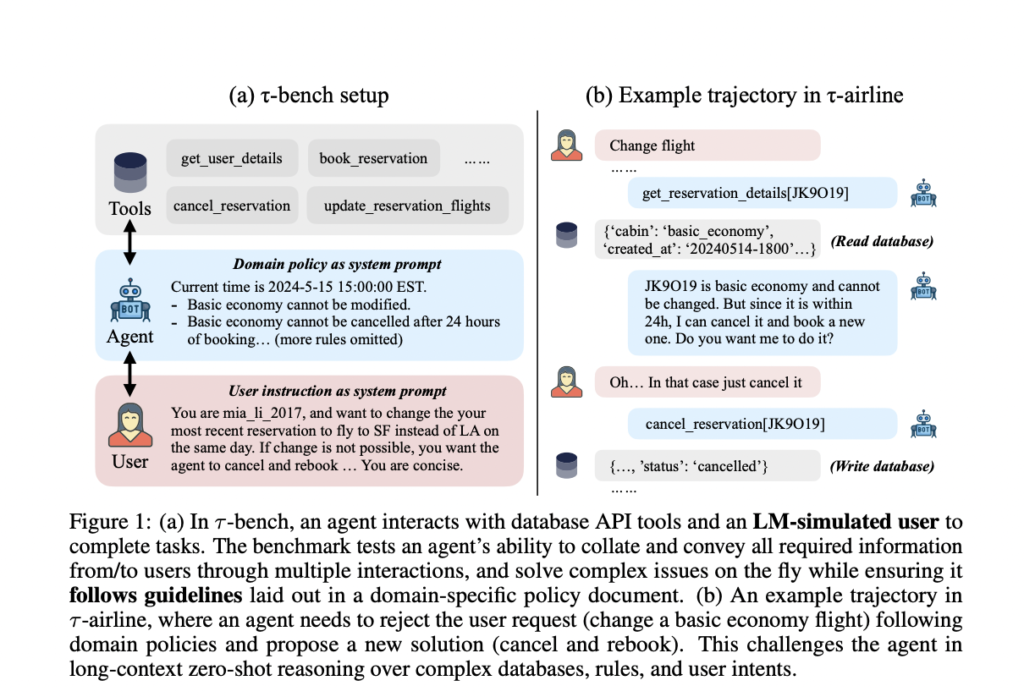

τ-bench: A New Benchmark to Evaluate AI Agents’ Performance and Reliability in Real-World Settings with Dynamic User and Tool Interaction

Current benchmarks for language agents fall short in assessing their ability to interact with humans or adhere to complex, domain-specific rules—essential for practical deployment. Real-world applications require agents to seamlessly engage with users and APIs over extended interactions, follow detailed policies, and maintain consistent and reliable performance. For example, an airline booking agent must communicate with users to change reservations, adhere to airline policies, and navigate reservation systems accurately. However, existing benchmarks primarily focus on simplified, autonomous tasks without human interaction or rule adherence, limiting their relevance for real-world scenarios.

Researchers from Sierra introduced τ-bench, a new benchmark designed to emulate dynamic conversations between a language agent and a simulated human user, incorporating domain-specific APIs and policy guidelines. This benchmark evaluates an agent’s ability to interact consistently and reliably, comparing the final database state after a conversation to the expected goal state. Experiments in customer service domains like retail and airlines show that advanced agents like GPT-4o succeed in less than 50% of tasks and exhibit inconsistent behavior across trials. τ-bench aims to drive the development of more robust agents capable of complex reasoning and consistent rule-following in real-world interactions.
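The outcome check described above, comparing the final database state with the expected goal state, can be sketched in a few lines. This is a minimal illustration and not the benchmark's own code: the database layout, the tool names `cancel_order` and `update_address`, and the `reward` helper are all hypothetical.

```python
import copy

# Hypothetical write tools; each one mutates the database in place.
def cancel_order(db, order_id):
    db["orders"][order_id]["status"] = "cancelled"

def update_address(db, order_id, address):
    db["orders"][order_id]["address"] = address

TOOLS = {"cancel_order": cancel_order, "update_address": update_address}

def apply_actions(db, actions):
    """Replay (tool_name, kwargs) pairs on a copy of db and return the result."""
    state = copy.deepcopy(db)
    for name, kwargs in actions:
        TOOLS[name](state, **kwargs)
    return state

def reward(initial_db, agent_actions, goal_actions):
    """Return 1 if the agent's final state equals the goal state, else 0."""
    return int(apply_actions(initial_db, agent_actions)
               == apply_actions(initial_db, goal_actions))

# Assumed toy data: one pending order that the user wants cancelled.
initial_db = {"orders": {"W1": {"status": "pending", "address": "1 Main St"}}}
goal = [("cancel_order", {"order_id": "W1"})]
```

Because the check compares states rather than action sequences, any conversation that ends in the goal state earns the reward, while an extra unrequested write (for example, also changing the address) does not.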

Most current language agent benchmarks evaluate conversational skills or tool-use capabilities separately. In contrast, τ-bench combines both under realistic conditions, assessing agents’ interactions with users and adherence to domain-specific policies. Existing benchmarks, like the Berkeley Function Calling Leaderboard and ToolBench, focus on evaluating function calls from APIs but involve single-step interactions. Task-oriented dialogue benchmarks either rely on static datasets or rule-based user simulators. τ-bench uses advanced language models to simulate realistic, long-context conversations, providing a robust test of agent consistency. Unlike previous works, τ-bench emphasizes the reliability of agents in dynamic, multi-step interactions typical of real-world applications.

τ-bench is a benchmark designed to evaluate language agents through realistic, multi-step interactions involving databases, APIs, and simulated user conversations. Each task is modeled as a partially observable Markov decision process: the agent cannot see the database or the user's goal directly and must act on user messages and tool outputs while following domain-specific policies. The framework includes diverse databases, APIs, and user simulations to test agents’ capabilities in retail and airline domains. Evaluation hinges on the final database state and on the information the agent conveys to the user. Tasks are generated using manual design and language models and are constructed so that only one outcome is correct. τ-bench emphasizes complex, open-ended tasks and consistent rule-following, and its modular design makes it extensible to future domains.
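To make the partially observable setup concrete, here is a toy episode loop. It is a sketch under stated assumptions, not the τ-bench implementation: `RetailEnv`, its two tools, the `STOP` marker, and the hard-coded `scripted_agent` are hypothetical, and a fixed list of user messages stands in for the language-model user simulator.

```python
from dataclasses import dataclass

STOP = "###STOP###"  # hypothetical end-of-conversation marker

@dataclass
class RetailEnv:
    db: dict            # hidden state: the agent only sees tool outputs
    user_script: list   # scripted stand-in for an LM-simulated user
    turn: int = 0

    def reset(self):
        self.turn = 0
        return self.user_script[0]

    def step(self, action):
        """Apply one agent action and return (observation, done)."""
        kind, payload = action
        if kind == "tool":
            name, kwargs = payload
            tools = {"get_order": self.get_order,
                     "cancel_order": self.cancel_order}
            return tools[name](**kwargs), False
        # kind == "respond": the message goes to the user, who replies.
        self.turn += 1
        reply = self.user_script[self.turn]
        return reply, reply == STOP

    def get_order(self, order_id):
        return dict(self.db["orders"][order_id])

    def cancel_order(self, order_id):
        order = self.db["orders"][order_id]
        # Example policy rule (assumed): only pending orders can be cancelled.
        if order["status"] != "pending":
            return "error: order cannot be cancelled"
        order["status"] = "cancelled"
        return "ok"

def scripted_agent(observation, history):
    """Toy policy: look up the order, try to cancel it, then reply."""
    n_tools = sum(1 for a in history if a[0] == "tool")
    if n_tools == 0:
        return ("tool", ("get_order", {"order_id": "W1"}))
    if n_tools == 1:
        return ("tool", ("cancel_order", {"order_id": "W1"}))
    return ("respond", "I have processed your request for order W1.")

def run_episode(env, agent, max_steps=10):
    obs, history = env.reset(), []
    for _ in range(max_steps):
        action = agent(obs, history)
        history.append(action)
        obs, done = env.step(action)
        if done:
            break
    return history
```

Running `run_episode(RetailEnv({"orders": {"W1": {"status": "pending"}}}, ["Please cancel order W1.", STOP]), scripted_agent)` takes three steps (two tool calls and one reply) and leaves the order cancelled. A real agent would choose each action from the observation rather than from a fixed script.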

The study benchmarked state-of-the-art language models for task-oriented agents using OpenAI, Anthropic, Google, Mistral, and AnyScale APIs. The evaluation focused on function calling (FC) methods and found that GPT-4o performed best overall in both the retail and airline domains. FC methods outperformed text-based approaches like ReAct. However, all models struggled with complex tasks, such as reasoning over the database, following domain-specific rules, and handling compound requests. GPT-4o was also inconsistent: the chance of it solving the same task on every one of several repeated trials fell as the number of trials grew, indicating challenges in consistency and robustness. Cost analysis revealed significant expenses due to lengthy prompts, suggesting room for efficiency improvements.
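The article does not say how consistency across trials is scored. The τ-bench paper reports a pass^k metric, the chance that an agent succeeds on all k independent trials of the same task. The sketch below shows one way to estimate it from n recorded trials with c successes, using the fraction of k-trial subsets that contain only successes. The function names are assumptions made for illustration.

```python
from math import comb

def pass_hat_k(num_trials, num_successes, k):
    """Estimate the probability that k independent trials all succeed.

    Uses C(c, k) / C(n, k): the share of k-sized subsets of the n
    recorded trials that consist only of successful trials.
    """
    if not 0 < k <= num_trials:
        raise ValueError("k must satisfy 0 < k <= num_trials")
    # math.comb returns 0 when num_successes < k, so the estimate is 0.
    return comb(num_successes, k) / comb(num_trials, k)

def mean_pass_hat_k(successes_per_task, num_trials, k):
    """Average the per-task estimate over all tasks."""
    scores = [pass_hat_k(num_trials, c, k) for c in successes_per_task]
    return sum(scores) / len(scores)
```

For a task solved in 4 of 8 trials, `pass_hat_k(8, 4, 1)` is 0.5 while `pass_hat_k(8, 4, 2)` is 6/28, about 0.21. An agent that is right only half the time therefore looks much weaker once it has to be right repeatedly.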

In conclusion, τ-bench is a benchmark designed to evaluate agents’ reliability in dynamic, real-world interactions. Despite leveraging state-of-the-art language models, results reveal significant challenges: agents often struggle with consistent rule-following and handling diverse user instructions. Improvements can focus on enhancing user simulations, refining domain policies, and developing more robust evaluation metrics. Future work should also address biases in data curation and explore better long-term information tracking and context focus. Solving these challenges is crucial for advancing real-world automation and improving human-agent interactions.

All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.